There’s nothing more annoying than seeing a spinning wheel or having an application close when you’re trying to support a customer.

The thing is, while this is an annoyance for your end users, it can represent a significant problem for your business’s bottom line. So, if you’re a business decision-maker or director, understanding latency and downtime is crucial to understanding what makes your business money.

What is latency?

Latency is a measure of the delay between asking your application or system to do something and the application or system actually performing that action.

Typing the name of a website into the address bar at the top of your browser window is a good example. When you hit enter, your browser sends a request to the server that holds that website’s information and, in return, the server sends back the information that appears before your eyes. The time between hitting enter and seeing the page is the latency.
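If you want to see that delay as a number, here is a minimal Python sketch. It simply times how long an action takes from start to finish. The names `measure_latency_ms` and `fake_request` are made up for illustration, and `fake_request` only pretends to be a server by pausing for 50 milliseconds, so treat this as a rough picture rather than a real monitoring tool.

```python
import time
from typing import Callable


def measure_latency_ms(action: Callable[[], object]) -> float:
    """Return how long `action` takes to complete, in milliseconds."""
    start = time.perf_counter()
    action()
    return (time.perf_counter() - start) * 1000.0


def fake_request() -> str:
    # Hypothetical stand-in for a real request: pause for 50 ms
    # to mimic the round trip to a server and back.
    time.sleep(0.05)
    return "page contents"


if __name__ == "__main__":
    print(f"latency: {measure_latency_ms(fake_request):.1f} ms")
```

In practice you would swap `fake_request` for whatever action you care about, such as loading a page or running a query.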

On this site, you can measure the latency that’s occurring across your business network quite easily. The higher the latency figure, the slower the response. Latency in itself is irritating and can slow your processes down – but it’s a more significant problem when it causes downtime.

What is downtime?

Downtime is the term used to describe any instance when you cannot access one or more of your systems. Application downtime is damaging, as it generally means you’re without one of your business tools. More damaging still is network downtime: an instance where your entire IT system cannot be accessed.

Although the figure will obviously vary from company to company, the20.com suggests that, on average, downtime costs businesses somewhere in the region of $5,600 for every minute lost. If that sounds like a lot, remember that this figure includes huge multinational businesses, but don’t underestimate how much downtime would cost you. As well as missed opportunities, there’s the minute-by-minute cost of having a workforce that isn’t doing anything, a cost which is likely to be large.
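To get a feel for the numbers, here is a rough Python sketch of the arithmetic. The $5,600 default is the average quoted above. The function names, the staff count and the hourly wage are assumptions made up for illustration, so plug in your own figures.

```python
# Average quoted in this article; your own figure will differ.
COST_PER_MINUTE_USD = 5_600


def downtime_cost(minutes: float, cost_per_minute: float = COST_PER_MINUTE_USD) -> float:
    """Estimated cost of an outage lasting `minutes`."""
    return minutes * cost_per_minute


def idle_staff_cost(minutes: float, staff: int, hourly_wage: float) -> float:
    """Wages paid to staff who cannot work during the outage."""
    return staff * hourly_wage * minutes / 60


if __name__ == "__main__":
    # Hypothetical example: a 30-minute outage, 20 staff on $25 an hour.
    print(f"outage at the average rate: ${downtime_cost(30):,.0f}")
    print(f"idle wages alone: ${idle_staff_cost(30, 20, 25.0):,.0f}")
```

At the average rate, a 30-minute outage works out at $168,000. A smaller business will come in well under that, but the idle wages line shows that even the most conservative estimate is not zero.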

How does latency lead to downtime?

So, how exactly do slow applications end up with companies losing millions of dollars?

Well, although latency isn’t the only reason downtime occurs, it can have a big impact – and it’s all about how a network handles delays.

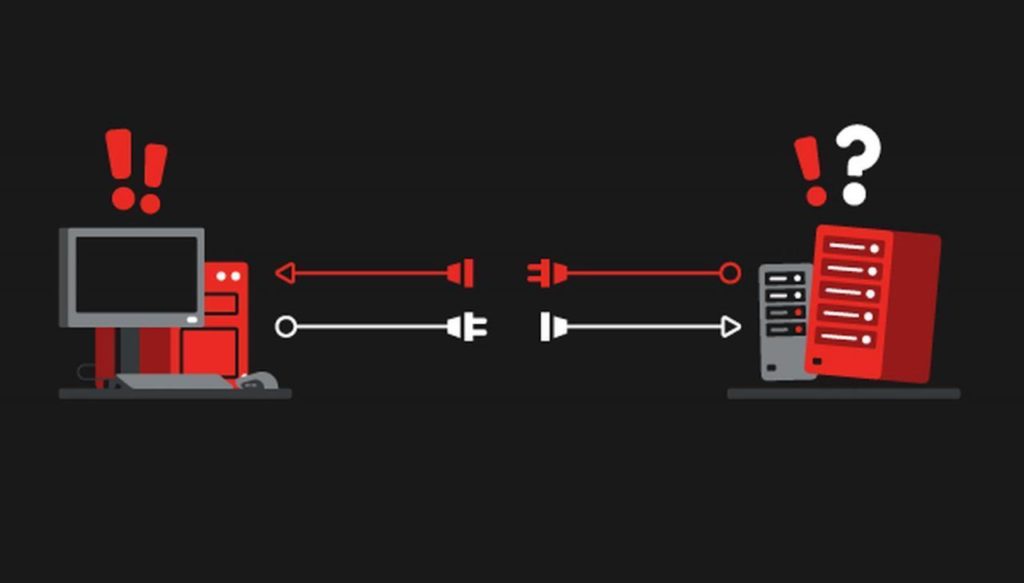

Latency occurs in the first instance because of a speed that two applications decide on between themselves when one requests information from the other. When your end user tries to access information on one of your systems, a tiny data packet is sent to and from the program that’s handling that request, and the speed at which that exchange takes place sets the pace for the much larger transmission of data that follows.

As an example, let’s say you’re about to make a video call to a colleague. As you click to call, this speed is set and, as long as it’ll support the call, the ensuing data will cross the network at that speed as you have your discussion.

All working smoothly so far? Good – that means there’s no significant latency – but, when someone else tries to make a call and the network begins to struggle to cope with the amount of data that’s needed, your call starts to stutter and drop in quality.

This is happening because the speed that was initially agreed between the two applications isn’t being met, and it’s not being met because there’s too much traffic on the highway. This ‘traffic’ is actually referred to as ‘throughput’ and, if the throughput tries to exceed what the highway is capable of handling (i.e. its bandwidth) then, just like on a real highway, traffic starts to back up, causing latency and slowing things down.
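The highway picture can be sketched in a few lines of Python. This is a deliberately simplified model, not how any particular network device works: each second a certain amount of data arrives, the link sends as much as its bandwidth allows, and anything left over waits in a backlog. The function name and the traffic figures are made up for illustration.

```python
from typing import List


def simulate_backlog(offered_mbps: List[float], bandwidth_mbps: float) -> List[float]:
    """Return the backlog (in megabits) left waiting at the end of each second."""
    backlog = 0.0
    history = []
    for offered in offered_mbps:
        # Data arriving this second joins whatever was already waiting.
        backlog += offered
        # The link can send at most `bandwidth_mbps` megabits per second.
        backlog -= min(backlog, bandwidth_mbps)
        history.append(backlog)
    return history


if __name__ == "__main__":
    # Hypothetical traffic: one call at first, then a second call joins.
    traffic = [8, 8, 14, 14, 14, 6]
    for second, waiting in enumerate(simulate_backlog(traffic, 10), start=1):
        # Waiting data divided by bandwidth approximates the added delay.
        print(f"second {second}: {waiting:.0f} Mb waiting, about {waiting / 10:.1f} s extra delay")
```

With a 10 Mbps link, nothing waits while 8 megabits arrive each second. Once 14 arrive each second, the backlog climbs to 4, then 8, then 12 megabits, and the delay grows with it.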

This is double trouble though, because now, when other applications look to set transfer speeds, those speeds are going to be very slow, which will mean ongoing data transfers across your systems slow down.

The thing is, traffic on this highway can be easily dealt with – and your network will deal with it promptly – by discarding packets of data in the bottleneck. So, when that call you’re on starts to drop audio or you find the picture is breaking up – it’s because you’re losing data in an effort to maintain the call. If this slow speed continues and data keeps getting dropped, eventually the receiving application won’t know what to do with the partial data it receives – and your call will fail.
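Here is a small Python sketch of that discarding behaviour, assuming the simplest possible policy: a buffer of fixed size that throws away any packet arriving when it is already full (often called tail drop). Real network equipment typically uses more sophisticated queue management, and the function name and numbers below are made up for illustration.

```python
from collections import deque
from typing import List, Tuple


def run_bottleneck(arrivals_per_tick: List[int], sends_per_tick: int, buffer_size: int) -> Tuple[int, int]:
    """Return (delivered, dropped) packet counts for a fixed-size buffer."""
    buffer = deque()
    delivered = 0
    dropped = 0
    for arriving in arrivals_per_tick:
        for _ in range(arriving):
            if len(buffer) < buffer_size:
                buffer.append(1)  # room in the buffer: packet waits its turn
            else:
                dropped += 1  # buffer full: packet is discarded
        # Send as many waiting packets as the link allows this tick.
        for _ in range(min(sends_per_tick, len(buffer))):
            buffer.popleft()
            delivered += 1
    return delivered, dropped


if __name__ == "__main__":
    # Hypothetical call traffic with a burst in the middle.
    delivered, dropped = run_bottleneck([3, 3, 6, 6, 3], sends_per_tick=4, buffer_size=5)
    print(f"delivered: {delivered}, dropped: {dropped}")
```

In this example 18 of the 21 packets get through and 3 are discarded during the burst. On a call, those missing packets are the gaps in audio and the broken-up picture.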

What does this mean for your systems?

So, your Skype call drops – annoying. But what happens when it’s your customer management system? Your cloud-based financial systems? Or your credit card processing network?

That’s when things start to get serious. Without mission-critical systems like these, you’re potentially going to start losing money pretty quickly. Customer and end-user inconvenience can only be smoothed over with the promise of call-backs for so long, and who knows when the big client call or order is going to come in?

What can you do about latency?

There’s good news and bad news when it comes to handling latency. The bad news is that any latency issues are almost certainly coming from your network – but the good news is, that means you can do something about them.

Latency isn’t something you’re likely to be able to deal with in-house, unless you’ve got an IT network team who understand the intricacies of network design and performance optimization. In reality, you’re likely to need an external company to come in and get a good understanding of your systems and your business requirements, before adjusting your network to operate in a way that puts uptime at the core of what you’re doing.

Getting to grips with latency should be considered an investment: an insurance policy against downtime. A good network provider won’t just put things right and step away, leaving you to fend for yourself. They’ll commit to an uptime figure, and they’ll make sure monitoring tools are in place to ensure you get the performance you need from your system.
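When a provider quotes an uptime figure, it helps to turn the percentage into minutes. The Python sketch below does that arithmetic for a 365-day year. The function name and the example percentages are assumptions for illustration, not figures any particular provider offers.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600, ignoring leap years


def allowed_downtime_minutes(uptime_percent: float) -> float:
    """Minutes of downtime per year that an uptime percentage still permits."""
    return MINUTES_PER_YEAR * (100.0 - uptime_percent) / 100.0


if __name__ == "__main__":
    # Hypothetical uptime commitments to compare.
    for uptime in (99.0, 99.9, 99.99):
        minutes = allowed_downtime_minutes(uptime)
        print(f"{uptime}% uptime allows about {minutes:.1f} minutes of downtime a year")
```

Even 99.9% uptime still allows roughly 526 minutes of downtime a year, close to nine hours, so the difference between one figure and the next is worth asking about.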

Latency can be a serious inconvenience when it slows you down – but that’s exactly the right time to jump on the problem – because prevention is always better than the cure when downtime is involved.